Regionalization operators

Here we introduce a pre-regionalization operator \(\mathcal{F}_{R}\) that lets us hypothesize a relationship between the physiographic descriptors \(\boldsymbol{D}\) and the hydrological model parameters \(\boldsymbol{\theta}\) such that:

\[\boldsymbol{\theta}(x)=\mathcal{F}_{R}\left(\boldsymbol{D}(x),\boldsymbol{\rho}\right),\,\forall x\in\Omega\]

with \(\boldsymbol{D}\) the \(N_D\)-dimensional vector of physiographic descriptor maps covering \(\Omega\), and \(\boldsymbol{\rho}\) the vector of tunable regionalization parameters, whose definition depends on which of the following two types of pre-regionalization operator is used.

Multivariate polynomial regression

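A multivariate polynomial regression operator \(\mathcal{P}\) that, for the \(k^{th}\) parameter of the forward hydrological model, writes:

\[\theta_{k}(x):=s_{k}\left(\alpha_{k,0}+\sum_{d=1}^{N_D}\alpha_{k,d}D_{d}^{\beta_{k,d}}(x)\right),\,\forall x\in\Omega\]

with \(\alpha_{k,0}\) and \(\alpha_{k,d}\) the regression coefficients, \(\beta_{k,d}\) the exponent applied to the \(d^{th}\) descriptor, and \(s_{k}(z)=l_{k}+(u_{k}-l_{k})/\left(1+e^{- z}\right),\,\forall z\in\mathbb{R}\), a transformation based on a sigmoid function with values in \(\left]l_k;u_k\right[\), thus imposing bound constraints in the forward hydrological model such that \(l_{k}<\theta_{k}(x)<u_{k},\,\forall x\in\Omega\). The regional control vector in this case is:

\[\boldsymbol{\rho}:=\left[\alpha_{k,0},\left(\alpha_{k,d},\beta_{k,d}\right)\right]^{T},\,\forall(k,d)\in[1..N_{\theta}]\times[1..N_{D}]\]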
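As a rough illustration, the polynomial mapping for a single parameter can be sketched with NumPy. This is a minimal sketch, not the library's implementation: it assumes the operator is a constant plus a weighted sum of descriptors raised to tunable exponents, passed through the scaled sigmoid \(s_k\). The names `scaled_sigmoid`, `polynomial_mapping`, `alpha0`, `alpha` and `beta`, the grid size and the bound values are all hypothetical.

```python
import numpy as np


def scaled_sigmoid(z, lower, upper):
    """s_k(z) = l_k + (u_k - l_k) / (1 + exp(-z)), with values in ]l_k; u_k[."""
    return lower + (upper - lower) / (1.0 + np.exp(-z))


def polynomial_mapping(descriptors, alpha0, alpha, beta, lower, upper):
    """Map descriptor maps of shape (N_D, ny, nx) to one parameter map (ny, nx).

    Assumed form: s_k(alpha0 + sum_d alpha[d] * D_d(x) ** beta[d]).
    """
    # Raise each descriptor map to its own exponent, then take the weighted sum
    # over the descriptor axis.
    powered = descriptors ** beta[:, None, None]
    z = alpha0 + np.tensordot(alpha, powered, axes=1)
    return scaled_sigmoid(z, lower, upper)


rng = np.random.default_rng(0)
n_descriptors = 7
descriptors = rng.uniform(0.0, 1.0, size=(n_descriptors, 5, 6))  # toy 5 x 6 grid

alpha0 = 0.1                            # hypothetical intercept
alpha = rng.normal(size=n_descriptors)  # hypothetical regression coefficients
beta = np.ones(n_descriptors)           # exponents of 1 give a linear regression

lower, upper = 1.0, 100.0               # hypothetical bounds l_k and u_k
theta_k = polynomial_mapping(descriptors, alpha0, alpha, beta, lower, upper)
print(theta_k.shape, float(theta_k.min()), float(theta_k.max()))
```

Whatever values the coefficients take during optimization, the sigmoid keeps every cell of `theta_k` strictly between `lower` and `upper`, which is the point of the transformation.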

Artificial neural network

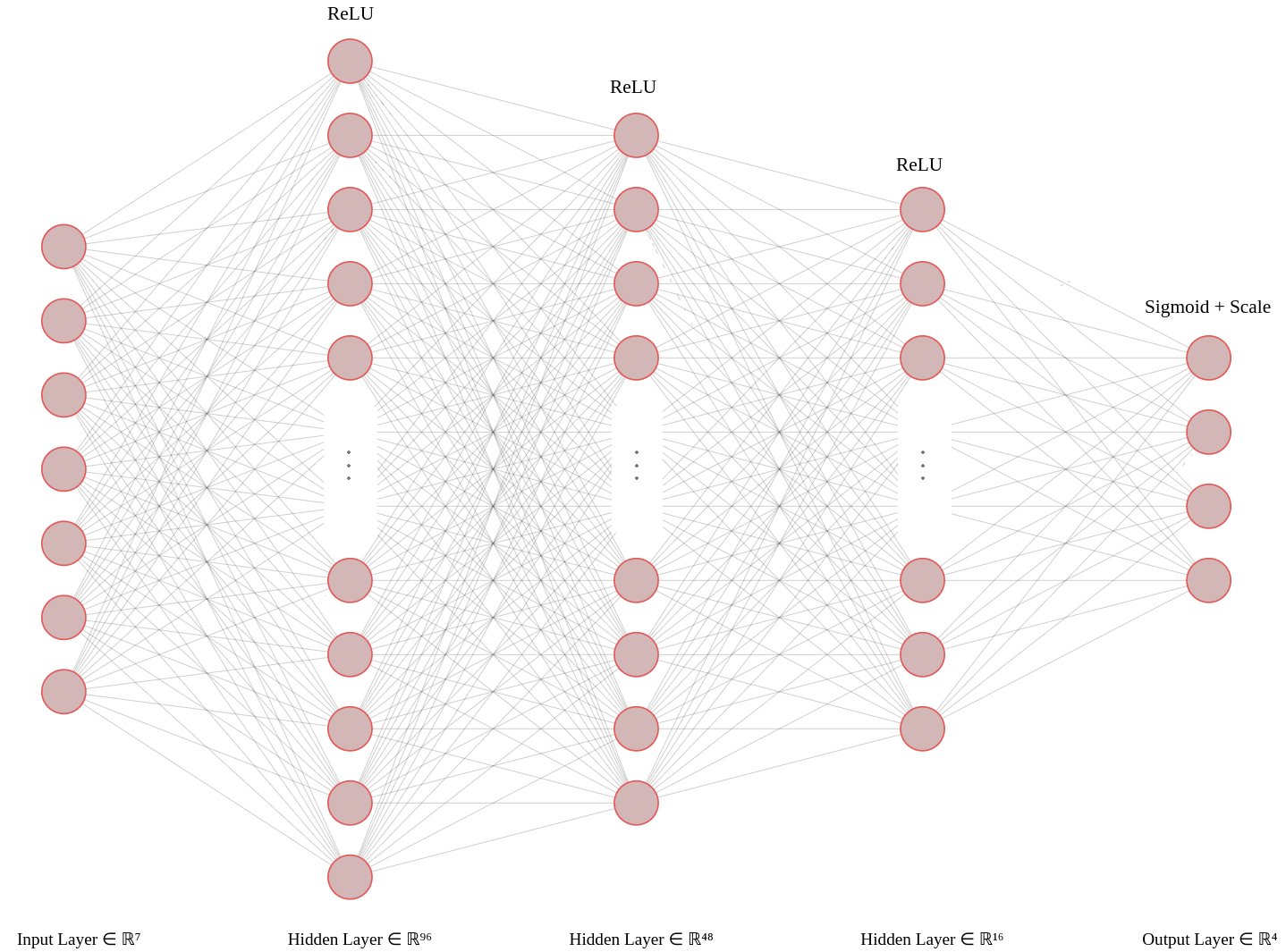

An artificial neural network (ANN) denoted \(\mathcal{N}\), consisting of a multilayer perceptron, aims to learn the descriptors-to-parameters mapping such that:

\[\boldsymbol{\theta}(x):=\mathcal{N}\left(\boldsymbol{D}(x),\boldsymbol{W},\boldsymbol{b}\right),\,\forall x\in\Omega\]

where \(\boldsymbol{W}\) and \(\boldsymbol{b}\) are respectively the weights and biases of the neural network composed of \(N_L\) dense layers. Note that an output layer consisting of a transformation based on the sigmoid function makes it possible to impose \(l_{k}<\theta_{k}(x)<u_{k},\,\forall x\in\Omega\), i.e. bound constraints on the \(k^{th}\) hydrological parameter. The regional control vector in this case is:

\[\boldsymbol{\rho}:=\left[\boldsymbol{W},\boldsymbol{b}\right]^{T}\]

The figure above illustrates the architecture of an ANN with three hidden layers, each followed by the ReLU activation function, and an output layer that uses the sigmoid activation function in combination with a scaling function. In this particular case, \(N_D=7\) and \(N_{\theta}=4\).
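The forward pass of such a network can be sketched in plain NumPy. This is only an illustrative sketch under stated assumptions: the hidden layer widths, the weight initialization, the bound values and the helper names (`init_mlp`, `ann_mapping`) are hypothetical and are not taken from any library. Only the overall structure (three hidden ReLU layers, a sigmoid output rescaled to the bounds, \(N_D=7\), \(N_{\theta}=4\)) follows the text.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_mlp(layer_sizes, rng):
    """Small random weights W and zero biases b for consecutive dense layers."""
    pairs = list(zip(layer_sizes[:-1], layer_sizes[1:]))
    weights = [rng.normal(scale=0.1, size=(n_in, n_out)) for n_in, n_out in pairs]
    biases = [np.zeros(n_out) for _, n_out in pairs]
    return weights, biases


def ann_mapping(descriptors, weights, biases, lower, upper):
    """Map descriptors (n_cells, N_D) to bounded parameters (n_cells, N_theta)."""
    h = descriptors
    # Hidden dense layers, each followed by ReLU.
    for W, b in zip(weights[:-1], biases[:-1]):
        h = relu(h @ W + b)
    # Output layer: sigmoid in ]0; 1[, then scaling to ]l_k; u_k[ per parameter.
    out = sigmoid(h @ weights[-1] + biases[-1])
    return lower + (upper - lower) * out


rng = np.random.default_rng(0)
n_descriptors, n_parameters = 7, 4
layer_sizes = [n_descriptors, 16, 8, 8, n_parameters]  # hidden widths are hypothetical
weights, biases = init_mlp(layer_sizes, rng)

lower = np.array([0.0, 0.0, -10.0, 1.0])     # hypothetical lower bounds l_k
upper = np.array([100.0, 500.0, 10.0, 50.0])  # hypothetical upper bounds u_k

descriptors = rng.uniform(size=(30, n_descriptors))  # 30 grid cells, flattened
theta = ann_mapping(descriptors, weights, biases, lower, upper)
print(theta.shape)
```

Here the lists `weights` and `biases` together play the role of the regional control vector: they are the only quantities that would be tuned, while the bounds stay fixed.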